By James Guilmart, Intel

As storage media, protocols, architectures and form factors have evolved over the past several decades, one component has remained constant: the RAID HBA. Whether using HDDs or SSDs, SATA or SAS, M.2 or U.2, the RAID HBA has cemented itself as the preferred RAID implementation… until now.

A fundamental shift in the balance between storage device performance and PCI Express® (PCIe®) architecture connections may have finally broken the hardware RAID model. The adoption of NVM Express® (NVMe®) storage over newer generations of PCIe connections means storage is faster than ever. The revolutionary performance increase delivered by the NVMe protocol, especially when paired with next-generation media such as Intel Optane or Micron X100 SSDs, has the potential to overpower the static RAID HBA design. This is primarily due to two RAID HBA hardware constraints, uplink bottlenecks and limited processing power:

- Uplink Bottlenecks: RAID HBAs connect to the platform via PCIe lanes, typically x8, so all throughput to the attached storage devices is limited by this single connection. That was enough bandwidth for legacy devices, but not for NVMe SSDs, which each require x4 PCIe lanes for full performance. Just two or three NVMe SSDs can saturate this uplink.

- Limited Processing Power: RAID HBAs use on-card cores for RAID calculations (e.g., RAID5 parity calculations), which provide a fixed amount of processing power. With NVMe SSDs, the number of IOPS, and the RAID calculations that follow from them, can fully consume these cores, causing increased I/O wait times and reduced efficiency. In effect, the RAID HBA cores are overpowered by the storage workload.
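The uplink arithmetic in the first bullet can be checked with a few lines of Python. This is a back-of-the-envelope sketch, not a measurement: it assumes PCIe 3.0 signaling (8 GT/s per lane with 128b/130b encoding), ignores packet and protocol overhead, and uses a hypothetical sustained per-SSD throughput of 3.0 GB/s chosen only for illustration. The function names are likewise illustrative.

```python
import math

# PCIe 3.0 signals at 8 GT/s per lane and uses 128b/130b line encoding.
GEN3_GT_PER_S = 8.0
ENCODING_EFFICIENCY = 128 / 130


def link_gbps(lanes: int) -> float:
    """Approximate one-direction PCIe 3.0 link bandwidth in GB/s.

    Ignores protocol (packet header) overhead, so real throughput is lower.
    """
    return lanes * GEN3_GT_PER_S * ENCODING_EFFICIENCY / 8  # 8 bits per byte


def ssds_filling_uplink_lanes(uplink_lanes: int = 8, lanes_per_ssd: int = 4) -> int:
    """How many x4 NVMe SSDs it takes to match the HBA uplink's lane count."""
    return math.ceil(uplink_lanes / lanes_per_ssd)


def ssds_saturating_uplink(ssd_gbps: float, uplink_lanes: int = 8) -> int:
    """How many SSDs of a given sustained throughput saturate the uplink."""
    return math.ceil(link_gbps(uplink_lanes) / ssd_gbps)


# Hypothetical per-SSD sustained read throughput (GB/s), for illustration only.
ASSUMED_SSD_GBPS = 3.0

print(f"x8 uplink: {link_gbps(8):.2f} GB/s")
print(f"x4 SSD link: {link_gbps(4):.2f} GB/s")
print("SSDs to fill the x8 uplink's lanes:", ssds_filling_uplink_lanes())
print("SSDs to saturate it at 3.0 GB/s each:", ssds_saturating_uplink(ASSUMED_SSD_GBPS))
```

Under these assumptions the two counts come out to 2 and 3, in line with the "two or three" devices mentioned above.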

What does this mean for the next generation of NVMe RAID? How must RAID evolve in order to provide a more flexible model that can scale with the performance of faster NVMe devices?

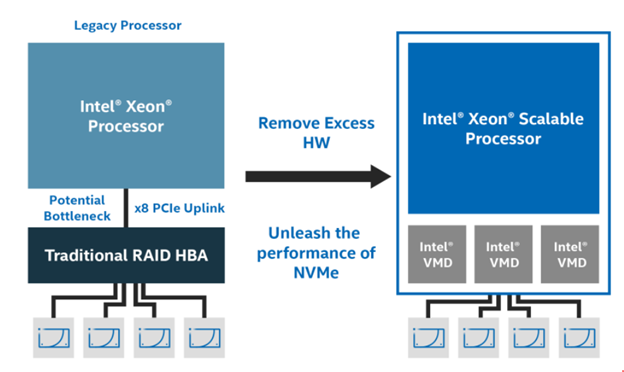

The answer: CPU-direct-attached storage with platform-integrated and hybrid RAID. The HBA and its limitations need to be removed from the architecture, bringing storage closer to the CPU so that applications can benefit from the full performance of NVMe SSDs without bottlenecks.

This does not mean software (or OS-based) RAID, which has long been available but has seen limited adoption. This type of RAID is managed purely at the OS host level, which means no storage isolation and added risk to platform stability. In addition, because the OS must be running for the RAID volume to be active, these implementations are not bootable and offer no pre-OS/UEFI management.

Instead, integrated and hybrid RAID solutions need to be developed that combine the direct-attached performance potential of software RAID with the robust enterprise functionality of RAID HBAs. For example, the NVMe technology ecosystem around Intel Xeon Scalable Processors implements this type of RAID architecture by combining Intel Volume Management Device (Intel VMD) hardware in the CPU with Intel VROC RAID drivers for a better NVMe RAID experience. Intel VMD is a redesign of the PCIe root complex that acts like an integrated HBA on silicon, providing a robust NVMe SSD connection point that delivers storage isolation, error handling, and enterprise storage features such as hot-plug and LED management. Intel VROC drivers then manage these directly connected SSDs, combining full SSD bandwidth with RAID management. Because it depends on Intel Xeon processors, this type of solution is designed in at the platform level and provides a RAID architecture that is natively available within server designs, with no additional hardware needed.

Figure 1. The Intel Xeon NVMe/PCIe ecosystem uses Intel VMD HW and Intel VROC drivers to integrate the RAID HBA functionality into the platform.

Integrated, hybrid RAID solutions will be required to enable a complete transition to NVMe storage by fully addressing the shortcomings of RAID HBAs:

- Direct Attached Storage: Uplink bottlenecks are removed by connecting SSDs directly to the CPU, without sacrificing storage isolation and reliability.

- Scalable Processing: Using CPU processing for RAID has traditionally been seen as a drawback. However, today's Data Center CPUs have the processing power and efficiency to handle simple RAID calculations (e.g., XOR for parity) with ease, which makes them well suited to the millions of IOPS achievable by multiple NVMe SSDs. In addition, these cores are general-purpose, so they can handle RAID operations when storage workloads peak and then shift to other work once the load subsides.
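To make the "simple RAID calculations" point concrete, here is a minimal Python sketch of RAID5-style XOR parity for a single stripe: the parity strip is the byte-wise XOR of the data strips, and any one lost strip can be rebuilt by XORing the parity with the surviving strips. It is illustrative only. It omits parity rotation across drives, stripe layout, and the vectorized code a production driver would use, and it does not describe how Intel VROC implements RAID5; the helper names are hypothetical.

```python
from functools import reduce
from typing import List


def xor_strips(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length strips."""
    if len(a) != len(b):
        raise ValueError("strips must be the same length")
    return bytes(x ^ y for x, y in zip(a, b))


def compute_parity(data_strips: List[bytes]) -> bytes:
    """Parity strip for one stripe: XOR of all data strips."""
    return reduce(xor_strips, data_strips)


def rebuild_strip(surviving_strips: List[bytes], parity: bytes) -> bytes:
    """Recover the single missing data strip from the survivors and parity."""
    return reduce(xor_strips, surviving_strips, parity)


# Three tiny data strips (real strips are much larger).
strips = [b"\x01\x02", b"\x10\x20", b"\xff\x00"]
parity = compute_parity(strips)
print(parity.hex())  # ee22

# Simulate losing the second drive's strip and rebuilding it.
recovered = rebuild_strip([strips[0], strips[2]], parity)
assert recovered == strips[1]
```

Each byte costs one XOR per data strip, which is why this work maps well onto general-purpose server cores.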

One industry example of this new RAID implementation is Intel Virtual RAID on CPU (Intel® VROC), which uses a silicon-integrated feature known as Intel Volume Management Device (Intel VMD) to support an HBA-less RAID solution:

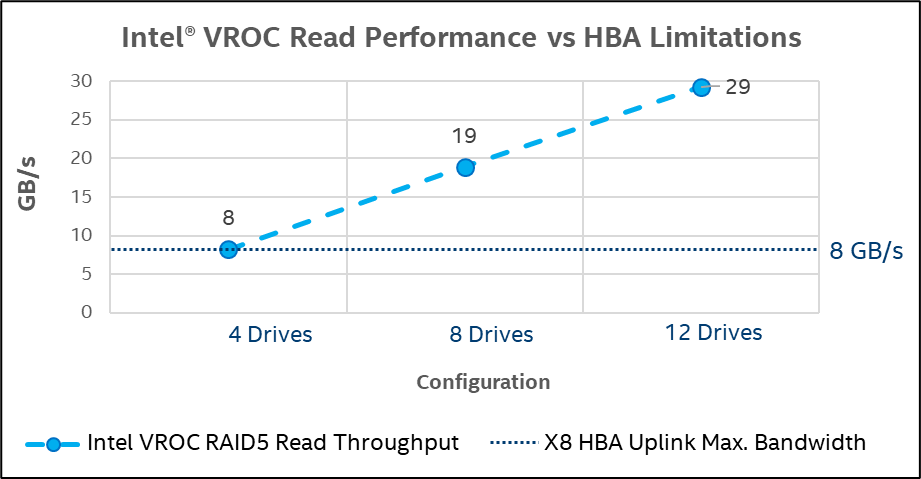

The effectiveness of integrated, hybrid RAID architectures can be seen in how performance scales as SSDs are added to the RAID array. The performance chart below shows Intel VROC RAID5 read bandwidth for 4-, 8-, and 12-SSD arrays, scaling up to 29 GB/s. RAID HBA performance does not even need to be measured for comparison; the physical limitations of the architecture are enough to show that RAID HBAs are ineffective for NVMe SSDs. The typical RAID HBA has an x8 PCIe lane uplink. For PCIe 3.0 technology, which carries roughly 1 GB/s per lane in each direction, this means a maximum throughput of about 8 GB/s. Regardless of the number of SSDs in the RAID array, 8 GB/s will be the maximum performance this architecture can achieve.
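The argument here is a simple min() over two limits: an array behind an HBA can never read faster than the HBA uplink, while CPU-attached SSDs are bounded only by their aggregate throughput (and, in practice, by RAID and CPU overheads this sketch ignores). The Python below models that ceiling for 4, 8, and 12 SSDs. The per-SSD throughput of 3.0 GB/s is a hypothetical placeholder rather than a drive specification, the function names are illustrative, and the printed values are theoretical ceilings, not the measured results in Figure 2.

```python
from typing import Optional


def pcie3_link_gbps(lanes: int) -> float:
    """Approximate one-direction PCIe 3.0 bandwidth (8 GT/s, 128b/130b) in GB/s."""
    return lanes * 8.0 * (128 / 130) / 8


def array_read_ceiling(n_ssds: int, ssd_gbps: float,
                       hba_uplink_lanes: Optional[int] = None) -> float:
    """Upper bound on array read bandwidth in GB/s.

    hba_uplink_lanes=None models CPU-direct attach (no shared uplink);
    otherwise the aggregate is capped by the HBA's PCIe 3.0 uplink.
    """
    aggregate = n_ssds * ssd_gbps
    if hba_uplink_lanes is None:
        return aggregate
    return min(aggregate, pcie3_link_gbps(hba_uplink_lanes))


ASSUMED_SSD_GBPS = 3.0  # hypothetical per-SSD read throughput

for n in (4, 8, 12):
    direct = array_read_ceiling(n, ASSUMED_SSD_GBPS)
    behind_hba = array_read_ceiling(n, ASSUMED_SSD_GBPS, hba_uplink_lanes=8)
    print(f"{n:2d} SSDs: direct-attach <= {direct:4.1f} GB/s, x8 HBA <= {behind_hba:4.1f} GB/s")
```

The HBA column stays flat at about 7.9 GB/s however many SSDs are added, while the direct-attach ceiling grows with the array.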

Figure 2. Read performance of Intel VROC compared to the throughput limitations (bottleneck) of RAID HBAs.

Moving forward, RAID HBAs can adopt some new design changes to ease this bottleneck. PCIe 4.0-based RAID HBAs used on PCIe 4.0-capable platforms will effectively double the throughput limit. However, the SSDs themselves will also double in performance, so integrated RAID solutions like Intel VROC will see a similar increase, and the RAID HBA throttling will remain. The uplink may also be widened from x8 to x16 PCIe lanes, again doubling the potential throughput of the HBA. This does not eliminate the bottleneck; it only raises the ceiling, which still sits below the potential of integrated RAID solutions. In addition, for the HBA to manage this larger uplink, changes to the card design will be required (e.g., more processing cores) to keep up, which adds cost to the HBA.

In comparison, integrated RAID solutions take this complexity out of the equation. The faster PCIe connections on the platform/CPU match the PCIe connection speeds of the directly connected NVMe SSDs to deliver effective storage performance. As new platforms and new PCIe specification generations are introduced, this integrated RAID architecture will inherently follow. All this is achieved by removing the RAID HBA hardware, which also removes its cost, power, heat, and space requirements from the platform.
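The scaling argument can be tabulated in a few lines. The Python sketch below computes theoretical one-direction link ceilings for an HBA uplink at PCIe 3.0 and 4.0 with x8 and x16 widths, next to the combined link bandwidth of twelve x4 SSDs attached directly to the CPU at the same generation. It assumes 128b/130b encoding for both generations and ignores protocol overhead, so these are link-rate upper bounds rather than achievable throughput; the 12-SSD count simply mirrors the largest array in Figure 2, and the function names are illustrative.

```python
# Transfer rate per lane in GT/s; PCIe 3.0 and 4.0 both use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0}


def pcie_link_gbps(gen: int, lanes: int) -> float:
    """Approximate one-direction link bandwidth in GB/s (no protocol overhead)."""
    return lanes * GT_PER_S[gen] * (128 / 130) / 8


def direct_attach_gbps(gen: int, n_ssds: int, lanes_per_ssd: int = 4) -> float:
    """Combined link bandwidth of n SSDs, each on its own CPU-attached link."""
    return n_ssds * pcie_link_gbps(gen, lanes_per_ssd)


for gen in (3, 4):
    for lanes in (8, 16):
        print(f"PCIe {gen}.0 x{lanes:<2} HBA uplink: {pcie_link_gbps(gen, lanes):5.1f} GB/s")
    print(f"PCIe {gen}.0 12 x4 SSDs, direct:  {direct_attach_gbps(gen, 12):5.1f} GB/s")
```

Doubling the HBA uplink, by generation or by width, doubles its ceiling, but a PCIe 4.0 x16 uplink (about 31.5 GB/s) still sits below the combined links of twelve directly attached PCIe 4.0 SSDs (about 94.5 GB/s).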

Integrated NVMe RAID solutions are relatively new to the market, and over the next few years they will see substantial improvements to their feature portfolios (e.g., out-of-band management or self-encrypting drive key management support), making them even more compelling. This shift is necessary to bring the full potential of NVMe SSDs to user workloads, which is why major players are investing in these technologies to balance processing and storage innovations and create future Data Center and Cloud deployments that are ready to tackle the upcoming demands of an increasingly digital world.

Figure 2 configuration:

System configuration: Intel® Server Board S2600WFT family, Intel® Xeon® 8170 Series Processors, 26 cores @ 2.1 GHz, 192 GB RAM, BIOS Release 7/09/2018, BIOS Version: SE5C620.86B.00.01.0014.070920180847

OS: RedHat* Linux 7.4, kernel 3.10.0-693.33.1.el7.x86_64, mdadm - v4.0 - 2018-01-26 Intel build: RSTe_5.4_WW4.5, Intel® VROC Pre-OS version 5.3.0.1039

NVMe SSDs: Intel® SSD DC P4510 Series 2 TB, drive firmware: VDV10131, Retimer

BIOS settings: Hyper-Threading enabled, Package C-State set to C6 (non-retention state) and Processor C6 enabled, P-States set to default, SpeedStep and Turbo enabled

Workload Generator: FIO 3.3, random, 32 workers, I/O depth 128, no filesystem, CPU affinitized

Software and workloads used in performance tests may have been optimized for performance only on Intel microprocessors. Performance tests, such as SYSmark and MobileMark, are measured using specific computer systems, components, software, operations and functions. Any change to any of those factors may cause the results to vary. You should consult other information and performance tests to assist you in fully evaluating your contemplated purchases, including the performance of that product when combined with other products. For more complete information visit www.intel.com/benchmarks.

Performance results are based on testing as of February 13, 2019 and may not reflect all publicly available security updates. See configuration disclosure for details. No product can be absolutely secure.